About Parlant

Parlant is built and maintained by a team of software engineers from Microsoft, EverC, Check Point and Dynamic Yield, NLP researchers from the Weizmann Institute of Science, and several contributors from Fortune 500 companies.

We built Parlant so we could deliver better results to our clients from customer-facing AI agents. Now we're sharing it with the community so that we can evolve it together, as we firmly believe in an open, guided approach to AI agents.

We've licensed Parlant under the Apache 2.0 open-source license. We don't believe in the long-term viability of the open-core business model and have no intention of monetizing Parlant itself or making it a freemium product.

With that said, alongside maintaining Parlant, we'll probably release some complementary tools for it down the line. Stay tuned, or get in touch!

Why Parlant

Although building demo-grade conversational AI agents with LLMs is now simple and accessible to many developers, the challenges of building production-grade service agents remain as real as ever.

Since GPT-4's release in March 2023, we've watched many GenAI teams (ourselves included) sail through the early stages of agent development, that is, before the agent meets actual users.

The real challenge emerges after deployment. Once our functioning GenAI agent meets actual users, our backlog quickly fills up with requests to improve how the agent communicates in a variety of situations: "Here it should say this; there it should follow that principle."

From a product perspective, crafting the right conversational experience takes time. An efficient feedback cycle is essential for delivering high-quality features and improvements in a timely and reliable manner, yet this basic capability is notably absent from today's LLM frameworks. That is why we built Parlant.

Our research into Conversation Design revealed that even subtle language adjustments—for example, in how the agent greets customers—can dramatically boost engagement. In one case, a simple rephrasing led to nearly 10% higher engagement for an agent that serves thousands of daily customers.

The Intrinsic Need for Guidance

With an eye to the future of GenAI, some view "correct" AI behavior as a purely algorithmic problem—something that can ultimately be automated without human input.

Parlant's philosophy challenges this assumption at its core.

Machine-learning algorithms (including LLMs) are based on data labeled by people: specific people with specific opinions (or vendor-specific labeling guidelines). So regardless of the algorithm, its behavior always rests on some people's input, which may or may not align with others'.

On that note, before behavior can be automated, it must first be defined and agreed upon by stakeholders. But how often do we achieve complete agreement with colleagues—or even with our past selves—on every detail? "Correct" behavior is inherently a moving target, constantly evolving for both individuals and organizations.

This leads to an important realization: even if we created a "perfect" agent, project stakeholders would still want to customize its behavior according to their own vision of correctness, regardless of any allegedly objective standard. This is why customization isn't just a feature—it's fundamental to Parlant's approach.

Parlant vs. Prompt Engineering

In typical prompt-engineered systems, whether graph-based or otherwise, adjusting specific behaviors becomes increasingly difficult as prompts grow more numerous and complex. This creates two troubling realities for GenAI teams: first, changing a prompt might break existing, tested behaviors; second, there's little assurance that new behavioral prescriptions will be followed consistently at scale.

With Parlant, the feedback cycle is reduced to an instant. When behavioral changes are needed, you can quickly and reliably modify the elements that shape the conversational experience—guidelines, glossaries, context variables, and API usage instructions—and the generation engine immediately applies these changes, ensuring consistent behavior across all interactions.

Better yet, since these behavioral changes are stored in code, they can be easily versioned and tracked using Git.
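Because behavioral elements live in code, editing a guideline shows up as an ordinary, reviewable diff. The sketch below illustrates that idea under explicit assumptions: `Guideline`, `GlossaryTerm`, `AgentBehavior`, and `to_snapshot` are hypothetical names invented for illustration, not Parlant's SDK, and the deterministic JSON snapshot is just one possible way to keep Git diffs readable.

```python
import json
from dataclasses import asdict, dataclass, field


# Hypothetical stand-ins for guidelines, glossaries and context variables.
# These are NOT Parlant's SDK classes; they only illustrate "behavior as code".
@dataclass(frozen=True)
class Guideline:
    condition: str  # when the guideline applies
    action: str     # what the agent should do in that case


@dataclass(frozen=True)
class GlossaryTerm:
    name: str
    description: str


@dataclass
class AgentBehavior:
    guidelines: list[Guideline] = field(default_factory=list)
    glossary: list[GlossaryTerm] = field(default_factory=list)
    context_variables: dict[str, str] = field(default_factory=dict)

    def to_snapshot(self) -> str:
        """Serialize deterministically so Git diffs show only real behavioral changes."""
        data = asdict(self)  # recursively converts nested dataclasses to dicts
        # Sort entries so merely reordering definitions in code yields no diff.
        data["guidelines"] = sorted(
            data["guidelines"], key=lambda g: (g["condition"], g["action"])
        )
        data["glossary"] = sorted(data["glossary"], key=lambda t: t["name"])
        return json.dumps(data, indent=2, sort_keys=True) + "\n"


behavior = AgentBehavior(
    guidelines=[
        Guideline(
            condition="the customer greets the agent",
            action="greet them back by name and offer help",
        ),
    ],
    glossary=[GlossaryTerm(name="Premium", description="The paid subscription tier")],
    context_variables={"customer_tier": "Premium"},
)

print(behavior.to_snapshot())
```

Committing the snapshot next to the code means a reviewer sees exactly which condition or action changed, and reordering definitions produces an identical file.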

Design Philosophy

The landscape of Large Language Models shows clear patterns in its evolution—patterns that directly influence how we should build agent frameworks. Let's look at the data.

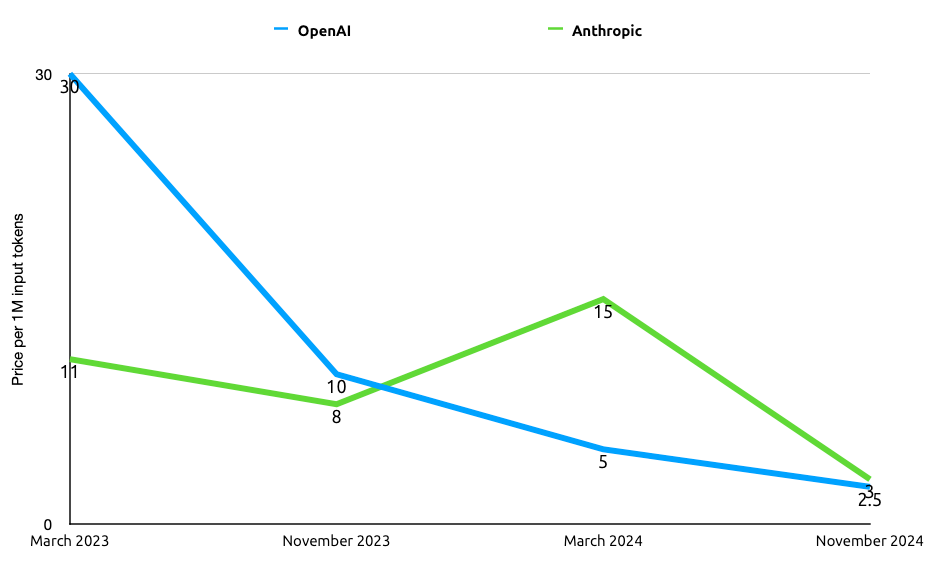

The trends are clear: response latency and token costs are dropping significantly across providers. OpenAI's GPT-4 Turbo offers 3x faster response times than its predecessor at 1/3 the cost, and GPT-4o followed suit at $2.50 per 1M input tokens with 300ms response latency. Together AI and Anthropic are following similar trajectories, with deployment optimizations and more efficient architectures driving down both latency and cost.
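To make the per-token pricing concrete, the following arithmetic applies the $2.50 per 1M input tokens quoted above to a hypothetical workload of 2,000 prompt tokens per request and 10,000 requests per day. Both workload numbers are illustrative assumptions, and output tokens, which are billed separately, are left out.

```python
# Price quoted above for GPT-4o input tokens, in US dollars per 1M tokens.
GPT_4O_INPUT_USD_PER_1M = 2.50


def input_cost_usd(input_tokens: int, usd_per_million: float) -> float:
    """Cost of sending `input_tokens` prompt tokens at a per-1M-token price."""
    return input_tokens / 1_000_000 * usd_per_million


# Hypothetical workload (assumed for illustration, not measured data).
tokens_per_request = 2_000
requests_per_day = 10_000

per_request = input_cost_usd(tokens_per_request, GPT_4O_INPUT_USD_PER_1M)
per_day = per_request * requests_per_day

print(f"per request: ${per_request:.4f}")  # per request: $0.0050
print(f"per day: ${per_day:.2f}")          # per day: $50.00
```

Because cost scales linearly with prompt size, every instruction kept out of the context when it isn't needed translates directly into lower cost and latency.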

More promisingly, custom LLM hardware from multiple vendors is on the rise, with claims of 25x improvements over today's best-performing APIs.

Yet when we look at accuracy metrics, the story changes. Even recent models with architectural improvements show relatively marginal gains in key metrics such as reasoning and instruction following, and in many cases, in our tests and experience, they actually regress. So while some capabilities expand and improve, fundamental challenges persist: making sense of complexity remains a significant roadblock.

Parlant's Response

In view of these trends, Parlant is designed to maximize control and alignment at the software level, even with today's LLMs. These are qualitative but crucial concerns that are largely neglected today. Parlant addresses them through dynamic context delivery, focusing on three specific concerns:

- Strong alignment infrastructure: clarifying the intended results, feeding instructions to the model only when they are determined to be relevant, and enforcing them with specialized prompting techniques.
- Explainable results: exposing how instructions are scored for relevance, along with a human-readable rationale, which helps teams troubleshoot and fix alignment issues.
- Human-centric design: designing the API to support a real-world development and maintenance process, accounting for the nature of software teams, the different roles they contain, and how people interact within them to create polished projects while meeting deadlines.
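The idea of feeding instructions to the model only when they are relevant, while keeping a rationale for each decision, can be sketched as follows. This is a hypothetical illustration, not Parlant's implementation: the `Guideline` and `Match` types, the `keywords` field, the keyword-overlap scorer, and the 0.5 threshold are all assumptions chosen to keep the example self-contained. A real system would more plausibly ask an LLM to judge relevance.

```python
from dataclasses import dataclass


# Hypothetical shapes for illustration only; not Parlant's data model or API.
@dataclass(frozen=True)
class Guideline:
    condition: str            # natural-language "when" clause
    action: str               # what the agent should do
    keywords: frozenset[str]  # toy relevance signal used by the scorer below


@dataclass(frozen=True)
class Match:
    guideline: Guideline
    score: float    # 0.0 (irrelevant) to 1.0 (every keyword present)
    rationale: str  # human-readable explanation, kept for troubleshooting


def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,!?;:").lower() for w in text.split()} - {""}


def score(guideline: Guideline, message: str) -> Match:
    """Toy scorer: share of the guideline's keywords found in the message."""
    found = guideline.keywords & tokenize(message)
    value = len(found) / len(guideline.keywords) if guideline.keywords else 0.0
    if found:
        rationale = f"message mentions {sorted(found)}, related to: {guideline.condition}"
    else:
        rationale = f"nothing in the message relates to: {guideline.condition}"
    return Match(guideline, value, rationale)


def build_context(
    guidelines: list[Guideline], message: str, threshold: float = 0.5
) -> tuple[str, list[Match]]:
    """Put only relevant guidelines in the prompt; return every score for inspection."""
    matches = [score(g, message) for g in guidelines]
    relevant = [m for m in matches if m.score >= threshold]
    lines = [f"- When {m.guideline.condition}, {m.guideline.action}." for m in relevant]
    instructions = "\n".join(lines) if lines else "- (no guidelines apply)"
    return f"Instructions:\n{instructions}\n\nCustomer: {message}", matches


guidelines = [
    Guideline("the customer asks about a refund", "explain the refund policy",
              frozenset({"refund", "money"})),
    Guideline("the customer reports a bug", "ask for steps to reproduce it",
              frozenset({"bug", "error"})),
]

prompt, matches = build_context(guidelines, "Can I get my money back? I want a refund.")
print(prompt)
for m in matches:
    print(f"{m.score:.2f}  {m.rationale}")
```

Returning every `Match`, not only the relevant ones, mirrors the explainability concern: when the agent ignores a guideline, its score and rationale show why.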